I mean they were trained on copyrighted material and nothing has been done about that so…

So abolish copyright law entirely instead of only allowing theft when capitalists do it.

It only seems to make a difference when the rich ones complain.

I should create an AI start up and torrent all the content.

On the other hand copyright laws have been extended to insane time lengths. Sorry but your grandkids shouldn’t profit off of you.

How funny this is gonna get when AI copyrights Nintendo stuff. Ah man I got my popcorn ready.

Can the rest of us please use copyrighted material without permission?

You already likely do. Every book you read and learned from is copyrighted material. Every video you watch on YouTube and learned from is copyrighted material.

The “without permission” is not correct. You’ve got permission to watch/listen/learn from it by them releasing it and you paying any applicable subscription or other costs. AI does the same.

By “use” I actually meant “reproduce portions of” and “make derivative works of”

As long as you use AI to generate it

The AI just gives you a 1:1 copy of its training data, which is the material. Voilà.

God I hope so.

Greed has no age.

I’m naming my torrent client “AI” and now I have the right to download a car.

While I understand their position, I disagree with it.

Training AI on copyrighted data - let’s take music for example - is no different to a kid at home listening to Beatles songs all day and using that as inspiration while learning how to write songs or play an instrument.

You can’t copyright a style of music, a sound, or a song structure. As long as the AI isn’t just reproducing the copyrighted content “word for word”, I don’t see what the issue is.

Does the Studio Ghibli artist own that style of drawing? No, because you can’t own something like that. Others are free to draw whatever they want while replicating that style.

a) An AI is not a person. We do not WANT an AI to be regarded as equal to a person under law. That’s a terrible idea.

b) How is that AI training material being sourced? Did they buy copies of every copyrighted song and every movie by every artist to include in the training data? If it’s music and streamed, are they paying the artist royalties based on every “play” the AI is processing during training, the same as if a human played the song over and over again to learn it? How about sheet music? Because if a PERSON is learning from training material, the license for sheet music and training materials is different from the license for a playable copy of the same work.

I’m willing to bet that the AI companies didn’t even pay for the regular copies of works much less ones licensed for use as training materials for humans, but it didn’t matter because an AI is an advanced algorithm and NOT A HUMAN.

a) No one is suggesting AI be regarded as equal to a person under law though?

b) if the music is being streamed then it’s up to the streaming company to pay the artists royalties. I have Spotify and I don’t pay the artists - Spotify does.

If the argument is “the people feeding data into the AI illegally acquired the content” then sure, argue that and prosecute them for piracy or whatever. That’s not the argument that is being made though.

If I learn a book by heart and then go around making money by reciting it, that’s illegal. Same thing.

Some companies own some wildly absurd things. Copyright is only enforced if you have the money to do your own policing, sometimes on multiple continents.

Even if it benefits big players more, copyright still benefits small artists

They do, but the point still stands. No one “owns” what these AIs are learning. That’s what they’re doing - learning, and they’re learning from copyrighted material the same way people learn from copyrighted material. The copyright holders - mainly artists - are just super upset about it because it’s showing that what they provide can be easily learned and emulated by computers.

They’re the horse and carriage sellers when cars were invented.

I’d rather people not profiting off copyrighted work be permitted than those who profit off it

I read this as pro-piracy and anti-AI, generally speaking, since the former is for personal use (art should be free to share and enjoy) while the latter is for commercial use (you should not be allowed to freely profit off the work of artists).

I intended it that way

AI for me but not for thee

Doesn’t work for any AI, because non-commercial AI companies get sold and become for-profit with a trained model.

But you, casual BitTorrent, eDonkey (I like good old things) and such user, can’t.

It’s literally a law allowing people doing some kinds of business to violate the rights of others, or, looking at it from the other side, making only people who don’t work for certain companies subject to a law …

What I mean is this: at some point in my stupid life I thought only individuals should ever be subjects of law. Where the parties are currently the government and some individual, a representative of the government (or a chain of people making decisions) should be the party, not the government in its entirety.

For everything that happens, a specific, easily identified person should be legally responsible. Or a group of people (say, the chain from the top down to that specific person in a hierarchy).

Because otherwise this happens: the distinction between a person and a business (and so on) allows other kinds of distinctions, including a person having fewer rights than a business or some other organization. And it will always drift in that direction, because a group is stronger than an individual.

And in this specific case somebody would be able to sue the prime minister.

OK, it’s a utopia, similar to anarcho-capitalism, just in a different dimension: that of responsibility.

You’re talking about illegally acquiring content, which isn’t the same as training AI off legally acquired/viewed content.

Says the dude who completed a song using AI.

He used AI to isolate John’s voice from an old demo. As long as a percentage of the proceeds of the song goes to John’s estate, I don’t think it’s quite the same as AI ripping off artists.

No, it isn’t, but I still refuse to listen to that song on purpose. I don’t even know the name.

EDIT: Just to be clear, I can’t avoid hearing the song out in the wild. However, I will not seek it out. I just do not want to hear a song purposefully made with AI in such a manner. I’m still bitter that LOVE won a Grammy over the soundtrack to Across the Universe. They only gave the award to LOVE because George Martin was involved in it.

Why would you “Now and Then” know the song name?

I didn’t read the article. I will admit this. I am only reacting to the headline.

In theory, could you then just register as an AI company and pirate anything?

Well, no, just the largest ones, who can pay some fine or have nearly endless legal funds to discourage challenges to their practice, thus creating a kind of pretend business moat. The average company won’t be able to and will get shredded.

What fine? I thought this new law allows it. Or is it one of those instances where training your AI on copyrighted material and distributing it is fine but actually sourcing it isn‘t so you can‘t legally create a model but also nobody can do anything if you have and use it? That sounds legally very messy.

You’re assuming most of the commenters here are familiar with the legal technicalities instead of just spouting whatever uninformed opinion they have.

No, because training an AI is not “pirating.”

If they are training the AI with copyrighted data that they aren’t paying for, then yes, they are doing the same thing as traditional media piracy. While I think piracy laws have been grossly blown out of proportion by entities such as the RIAA and MPAA, these AI companies shouldn’t get a pass for doing what Joe Schmoe would get fined thousands of dollars for on a smaller scale.

In fact, when you think about the way organizations like the RIAA and MPAA like to calculate damages based on lost potential sales they pull out of thin air, training an AI that might make up entire songs that compete with their existing catalog should be even worse. (Not that I want to encourage more of that kind of bullshit potential-sales argument.)

The act of copying the data without paying for it (assuming it’s something you need to pay for to get a copy of) is piracy, yes. But the training of an AI is not piracy because no copying takes place.

A lot of people have a very vague, nebulous concept of what copyright is all about. It isn’t a generalized “you should be able to get money whenever anyone does anything with something you thought of” law. It’s all about making and distributing copies of the data.

This isn’t quite correct either.

The reality is that there’s a bunch of court cases and laws still up in the air about what AI training counts as, and until those are resolved the most we can make is conjecture and vague moral posturing.

The closest we have is likely the court decisions on music sampling, and so far those haven’t been consistent and have mostly hinged on “intent” and “effect on sales of the original”. So, based on that logic, whether or not AI training counts as copyright infringement is likely going to come down to whether or not shit like “Ghibli filters” actually provably (at least as far as a judge is concerned) fucks with Ghibli’s sales.

Where the training data comes from seems like the main issue, rather than the training itself. Copying has to take place somewhere for that data to exist. I’m no fan of the current IP regime, but it seems like an obvious problem if you get caught making money with terabytes of content you don’t have a license for.

The slippery slope here is that you, as an artist, hear music on the radio, in movies and TV, and in commercials. All that listening is training your brain. If an AI company just plugged in an FM radio and learned from that music, I’m sure a lawsuit could end up making it so that no one could listen to anyone’s music without being tainted.

That feels categorically different unless AI has legal standing as a person. We’re talking about training LLMs, there’s not anything more than people using computers going on here.

A lot of the griping about AI training involves data that’s been freely published. Stable Diffusion, for example, trained on public images available on the internet for anyone to view, but led to all manner of ill-informed public outrage. LLMs train on public forums and news sites. But people have this notion that copyright gives them some kind of absolute control over the stuff they “own” and they suddenly see a way to demand a pound of flesh for what they previously posted in public. It’s just not so.

I have the right to analyze what I see. I strongly oppose any move to restrict that right.

Publicly available =/= freely published

Many images are made and published with anti AI licenses or are otherwise licensed in a way that requires attribution for derivative works.

The problem with those things is that the viewer doesn’t need that license in order to analyze them. They can just refuse the license. Licenses don’t automatically apply, you have to accept them. And since they’re contracts they need to offer consideration, not just place restrictions.

An AI model is not a derivative work, it doesn’t include any identifiable pieces of the training data.

And what of the massive amount of content paywalled that ai still used to train?

If it’s paywalled how did they access it?

It’s also pretty clear they used a lot of books and other material they didn’t pay for, and obtained via illegal downloads. The practice of which I’m fine with, I just want it legalised for everyone.

So streaming is fine but copying is not?

Streaming involves distributing copies so I don’t see why it would be. The law has been well tested in this area.

It’s exploiting copyrighted content without a licence, so, in short, it’s pirating.

“Exploiting copyrighted content” is an incredibly vague concept that is not illegal. Copyright is about distributing copies of copyrighted content.

If I am given a copyrighted book, there are plenty of ways that I can exploit that book that are not against copyright. I could make paper airplanes out of its pages. I could burn it for heat. I could even read it and learn from its contents. The one thing I can’t do is distribute copies of it.

It’s about making copies, not just distributing them; otherwise I couldn’t be bound by a software EULA, because I wouldn’t need a license to copy the content into my computer’s RAM to run it.

The enforceability of EULAs varies with jurisdiction and with the actual contents of the EULA. It’s by no means a universally accepted thing.

It’s funny how suddenly large chunks of the Internet are cheering on EULAs and copyright enforcement by giant megacorporations because they’ve become convinced that AI is Satan.

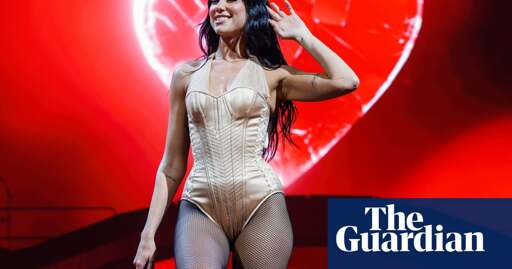

What’s a Dua Lipa?

It’s like the goal is to bleed culture from humanity. Corporate is so keen on the $$$ they’re willing to sacrifice culture to it.

I’ll bet corporate gets to keep their copyrights.

Absolute fastest way to kill this shit? Feed the entire Disney catalog in and start producing knockoff Disney movies. Disney would kill this so fast.

Or Nintendo.

With a mercenary death squad, probably.

That’s exactly what i was just thinking.

Where’s Disney in all of this? https://futurism.com/the-byte/disney-mocked-fake-cgi-actors-crowd-scene

Using AI to cook up some fake actors as of a couple years ago.

Probably getting in on it tbh

Good point. I wouldn’t be surprised if they have deals with all the ai companies.

The record companies already have all the data and all the rights. Petitions like these are meant to rig the game in their favor, so we get the official Warner Music AI at a high price point with licensing fees, and anything open source is deemed illegal and can’t be used in products.

If you’re on the side that stands with Disney, you are probably on the wrong one.