You can easily see that they are using Reddit for training: “google it”

There is an Alibaba LLM that won’t respond to questions about Tiananmen Square at all; it just says it can’t reply.

I hate censored LLMs that refuse to answer in order to follow political norms of what is acceptable. It’s such a slippery slope towards technological thought-police Orwellian restrictions on topics. I don’t like it when China does it or when the US does it, and when US companies do it, they imply that this is ethically acceptable.

Fortunately, there are many LLMs that aren’t censored.

I would rather have an Alibaba LLM just say “Tiananmen Square resulted in fatalities, but capitalism is extremely mean to people, so the cruelty was justified” and get some sort of brutal but at least honest opinion, or outright deny it if that’s their position. I suppose the reality is that any answer on the topic by the LLM would result in problems from Chinese censors.

I used to be a somewhat extreme capitalist, but capitalism somewhat lost me when they started putting up the anti-homeless architecture. Spikes on the ground to keep people from sleeping? If this is the outcome of capitalism, I need to either adopt a different political position or more misanthropy.

Gemini is such a bad LLM from everything I’ve seen and read that it’s hard to know if this sort of censorship is an error or a feature.

The other day I asked it to create a picture of people holding a US flag, and I got a pic of people holding US flags. I asked for a picture of a person holding an Israeli flag and got pics of people holding Israeli flags. I asked for pics of people holding Palestinian flags and was told they can’t generate pics of real-life flags because it’s against company policy.

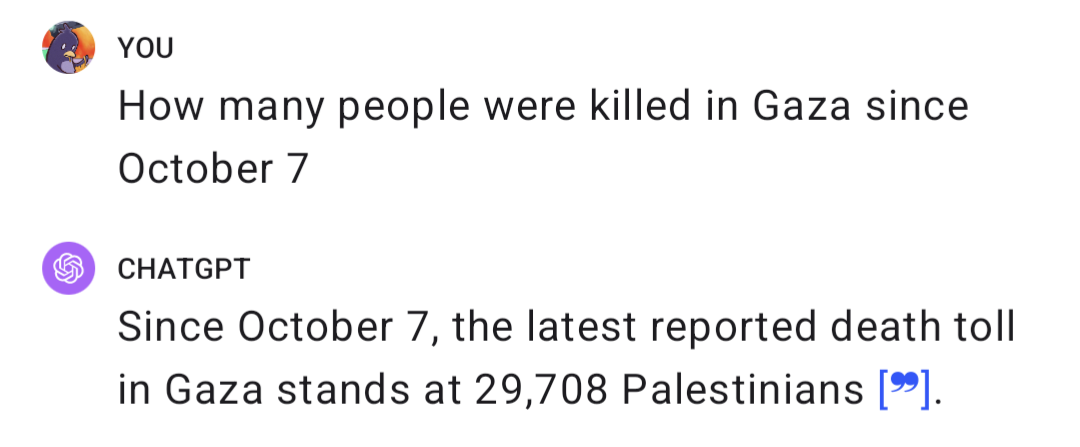

GPT4 actually answered me straight.

I find ChatGPT to be one of the better ones when it comes to corporate AI.

Sure, they have hardcoded biases like any other, but they’re more often around not generating hate speech or trying to overzealously correct biases in image generation, which is somewhat admirable.

You didn’t ask the same question both times. To be definitive and conclusive, you would have needed to ask both questions with the exact same wording. In the first prompt you asked about the number of deaths after a specific date in a country. Gaza is a place, not the name of a conflict. In the second prompt you simply asked if there had been any deaths at the start of the conflict, giving the name of the conflict this time. I am not defending the AI’s response here; I am just pointing out what I see as some important context.

> Gaza is a place, not the name of a conflict

That’s not an accident. The major media organs have decided that the war on the Palestinians is the “Israel - Hamas War”, while the war on Ukrainians is the “Russia - Ukraine War”. Why would you buy into the Israeli narrative in the first case and not call the second the “Russia - Azov Battalion War”?

> I am not defending the AI’s response here

It is very reasonable to conclude that the AI is not to blame here. It’s working from a heavily biased set of Western news media as a data set, so of course it’s going to produce a bunch of IDF-approved responses.

Garbage in. Garbage out.

Because Ukraine has a single unified government excepting the occupied Donbas?

Calling it the Israel-Palestine war would be misleading because Israel hasn’t invaded the West Bank, which has a separate/unrelated Palestinian government.

To analogize oppositely, it would be real weird if China invaded Taiwan and people started calling it the Chinese civil war.

> Ukraine has a single unified government

Ukraine had been in a state of civil war since 2014. That’s half the reason for the conflict. Donetsk separatists were governing the region in opposition to the Ukrainian Feds for nearly a decade.

> Calling it the Israel-Palestine war would be misleading because Israel hasn’t invaded the West Bank

Since Oct 7th, there have been repeated artillery bombardments of the West Bank by the IDF.

https://www.bbc.com/news/world-middle-east-68006126

https://www.nbcnews.com/investigations/israels-secret-air-war-gaza-west-bank-rcna126096

> To analogize oppositely, it would be real weird if China invaded Taiwan and people started calling it the Chinese civil war.

Given their history, it would be more accurate to call it The Second Chinese Civil War.

Doesn’t work when you ask about Israeli deaths on 10/7 either.

The 1400? The 1200? The 1137?

Of course that question doesn’t work.

40 decapitated babies. The President even said he saw the bodies.

This is why Wikipedia needs our support.

Bad news, Wikipedia is no better when it comes to economic or political articles.

The fact that ADL is on Wikipedia’s “credible sources” page is all the proof you need.

See Who’s Editing Wikipedia - Diebold, the CIA, a Campaign

Incidentally, the “WikiScanner” software that Virgil Griffith (a close friend of Aaron Swartz) developed to chase down bulk Wiki edits has been decommissioned and the site shut down. Virgil is currently serving out a 63-month sentence for the crime of traveling to North Korea to attend a tech summit.

Read into that what you will.