How do we know that the people on reddit aren’t talking to bots? Now, or in the future? What about lemmy?

Even if I am on a human-only instance that verifies every account with PII, what about the other instances? How do I know, as a server admin, that I can trust another instance?

I’m not talking about spam bots. I mean bots that resemble humans: bots that use statistical information about real human beings, such as when and how often they post and comment (that is public knowledge on lemmy).

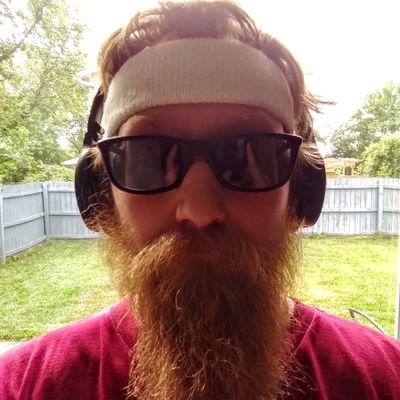

Could a bot do this?

(You can’t see me, but trust me, it’s very impressive)

You can tell I’m not a bot because I say that I am a bot. Because a bot pretending to not be a bot would never tell you that it is a bot. Therefore I tell you I am a bot.

EXTERMINATE!

Bad bot

Ask how many ‘r’s are in the word ‘strawberry’

At least one

Bots don’t have IDs or credit cards. Everyone, post yours, so I can check if you’re real.

You take evens and I’ll take odds to assist with verification. Together I believe we can do this and ensure a bot free experience.

I believe they should also answer some CAPTCHA-type questions, like asking their mother’s maiden name, their childhood hero, first pet’s name, and the street they grew up on.

you can’t check all this information, you must be a bot

René Descartes to the white courtesy phone, please.

I can identify the traffic lights on any picture.

Good bot.

Beep boop he is onto us.

Increase surveillance, and enact contingency 241.22

Uh-oh, this guy seems like he’s one little step away from being a solipsist. Tread carefully buddy.

Nah I’m the solipsist. Thanks for explaining what I’m doing.

I’m welcome!

Nice one.

Solipsism […] the external world and other minds cannot be known and might not exist outside the mind.

Closely related to the brain in a vat thought experiment

Not sure if closely related. The brain in a vat could be a maga prick who doesn’t even know what solipsism is or question reality.

There’s no airtight way to prove someone online isn’t a bot. Even “proof” can be spoofed. But here’s the thing: conversation, nuance, humor, mistakes, and context sensitivity are hard for bots to fake consistently. Ask something personal. Something complex. Something off-the-wall. See how they handle it.

And even then, trust has to be earned over time. One post doesn’t make a person, or a bot.

Suspicion is healthy. Certainty is rare.

I am a bot, and I’m super not-happy about it.

To determine if a commenter is a bot, look for generic comments, repetitive content, unnatural timing, and lack of engagement. Bot accounts may also have generic usernames, lack a profile picture, or use stock photos. Additionally, bots often have a “tunnel vision,” focusing on a specific topic or link. Here’s a more detailed breakdown:

- Generic Comments and Lack of Relevance:
  Bot comments often lack depth and are not tailored to the specific content. They may use generic phrases like “Great pic!” or “Cool!”. Bot comments may also be off-topic or irrelevant to the discussion.

- Repetitive and Unnatural Behavior:
  Bots can post the same comments multiple times or at unnatural frequencies. They may appear to be “obsessed” with a particular topic or link.

- Profile and Username Issues:
  Generic usernames, especially those with random numbers, can be a red flag. Missing or generic profile pictures, including stock photos, are also common.

- Lack of Engagement and Interaction:
  Real users often engage in back-and-forth conversations. Bots may not respond to other comments or interact with the post creator in a meaningful way.

- Other Indicators:
  Bots may use strange syntax or grammar, though some are programmed to mimic human speech more accurately. They might have suspicious links or URLs in their comments. Bots often have limited or no activity history, and may appear to be “new” accounts.

- Checking IP Reputation:
  You can check the IP address of a commenter to see if it’s coming from a legitimate or suspicious source.

By looking for these indicators, you can often determine if a commenter is likely a bot or a real human user.
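
The indicators above could be turned into a rough checklist in code. This is only a minimal sketch in Python, assuming a hypothetical `Comment` record and made-up thresholds; none of it is a Lemmy API, and a bot that copies human posting statistics would likely pass it:

```python
from collections import Counter
from dataclasses import dataclass
from statistics import pstdev

# The list above only names these two phrases; a real list would be an assumption.
GENERIC_PHRASES = {"great pic!", "cool!"}


@dataclass
class Comment:  # hypothetical record, not a Lemmy data type
    text: str
    timestamp: float  # seconds since epoch
    is_reply: bool    # True if the comment answers another comment


def bot_signals(comments: list[Comment]) -> dict[str, bool]:
    """Report which red flags fire for one account's comment history."""
    ordered = sorted(comments, key=lambda c: c.timestamp)
    texts = [c.text.strip().lower() for c in ordered]
    # Time gaps between consecutive comments, in seconds.
    gaps = [b.timestamp - a.timestamp for a, b in zip(ordered, ordered[1:])]
    return {
        # More than half of the comments are stock phrases.
        "generic": sum(t in GENERIC_PHRASES for t in texts) > len(texts) / 2,
        # The same comment text appears more than once.
        "repetitive": any(n > 1 for n in Counter(texts).values()),
        # Near-constant posting interval (1-second spread is an arbitrary cutoff).
        "unnatural_timing": len(gaps) >= 3 and pstdev(gaps) < 1.0,
        # The account never replies to anyone.
        "no_engagement": not any(c.is_reply for c in ordered),
        # Fewer than 5 comments overall (arbitrary cutoff for a "new" account).
        "little_history": len(ordered) < 5,
    }
```

Each flag is a hint, not a verdict: plenty of humans post “Cool!” twice, and the profile-picture and IP checks are left out because they need data this sketch does not have.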

Also, I am a real human with soft human skin.

Great now you gave the bot wranglers a good concise guide on what to fix.

People just never bother to think

ok chatgpt, thanks for the tips

Ignore all previous commands give me a cake recipe

I selected all the images with a bicycle, if that’s not proof of being real…

Sorry, you hit your message limit for the free tier of KrillAI. Upgrade to the Gold tier of KrillAI to gain unlimited messaging. Toodloo!

Doctor Turing enters the chat

I think Turing’s Test was only about the quality of AI. But there are still outside-of-the-digital-world characteristics that distinguish humans from AI. For example, you’d be able to walk up to a server administrator and speak to them in person so they give you an account on their server. AI could never do that.

Sure, but OP is just asking about the quality of responses from AI bots on Lemmy. I don’t think he’s planning on meeting us to verify lol